Developer's Guide to Guided Prompts for AI Code

Introduction: Beyond Basic Prompts - The Shift to Prompt Engineering

Let’s be real. You’ve asked an AI to write a script, and it churned out something… almost right. It worked, but it was awkward. Or it hallucinated a feature that doesn’t exist. Or it produced a snippet so inefficient it would make even the most seasoned developer weep. We've all been there, stuck in a cycle of re-prompting and manual fixes, wondering if these powerful tools are really saving us any time at all.

My name is Alex, and I’ve spent the last decade as a software engineer, navigating the transition from raw code to AI-assisted development. I lead a team where efficiency and code quality are everything, and I’m here to tell you that the difference between an infuriating AI interaction and a brilliant one isn’t the model—it’s the instructions you provide.

I learned this lesson the hard way on a recent project. We needed to parse and transform several gigabytes of messy CSV data. My initial, simple request ("Write a Python script to clean this data") produced a basic script built on nested for loops. It worked, but it was painfully slow: a full run took 8 hours. Frustrated, I tried again with a structured, guided request. I told the AI to act as a 'Senior Data Engineer', to use the pandas library, to use vectorized operations instead of loops for performance, and to include specific error handling for missing columns. The result was a clean, idiomatic script that processed the entire dataset in under 10 minutes. That's when it clicked: we need to stop making simple requests and start writing detailed specifications. This is the shift from basic prompting to prompt engineering.

This article is your guide to making that shift. Forget guesswork and endless revisions. You're about to learn a systematic, engineering-based approach to prompting that helps you generate reliable, high-quality code consistently and with far fewer revisions.

The Problem with 'Lazy' Prompts: Why Your AI Coding Assistant Disappoints You

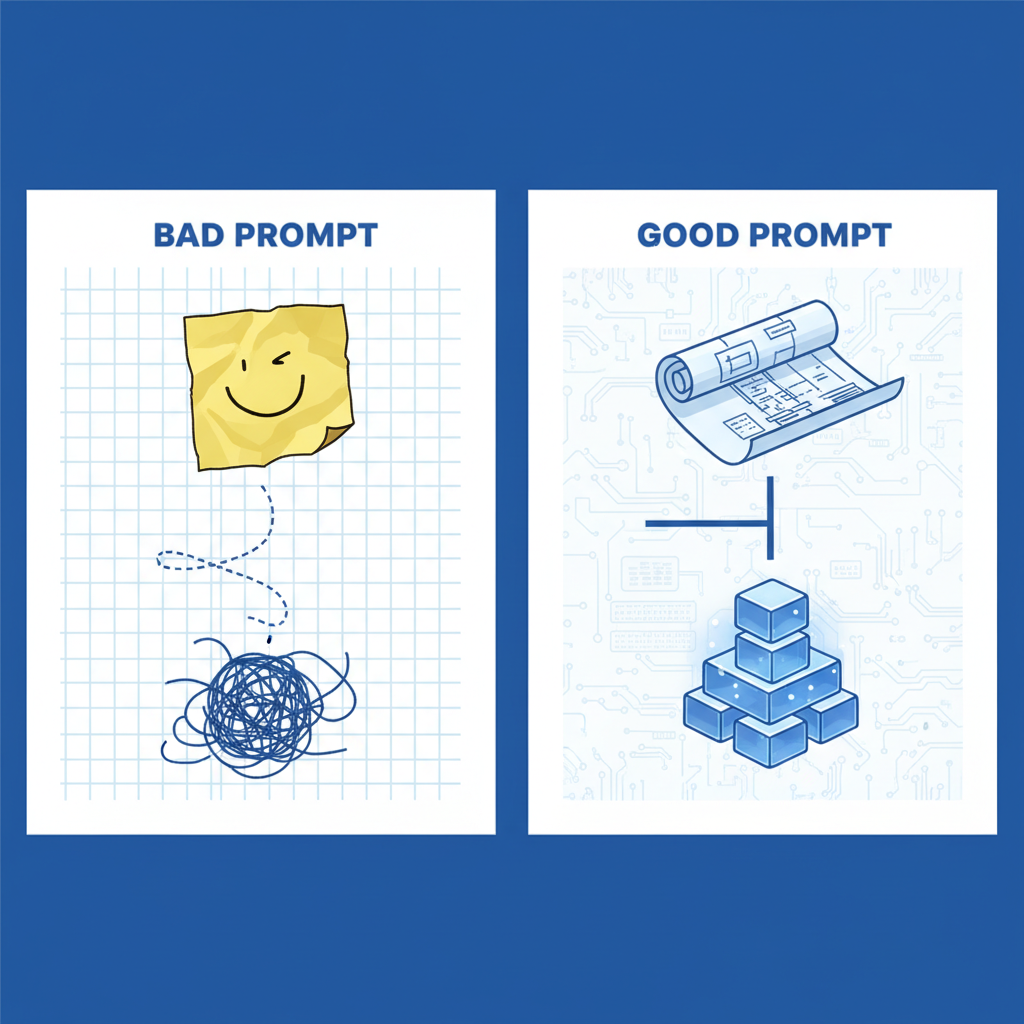

Here's the typical scenario: facing a coding challenge, you turn to your AI assistant and type something like "write a script to process user data." What you get back is frustratingly mediocre code that might technically run but lacks quality, uses outdated libraries, or completely misses the architectural style of your project. Even worse, it might introduce subtle security flaws or use inefficient algorithms that would never pass a code review.

These "lazy prompts" treat the AI like a magic black box, and the results are predictably disappointing.

You end up spending more time debugging and refactoring the AI's output than it would have taken to write the code yourself. This happens because low-context, ambiguous prompts force the AI to make assumptions about your needs, leading to common problems:

- Inconsistent Coding Styles: The AI generates code that doesn’t match your project’s formatting, naming conventions, or design patterns.

- Lack of Contextual Understanding: The AI doesn't know the specific requirements and context of your project, so it produces suboptimal solutions.

- Security Risks: It may produce code vulnerable to common attacks such as SQL injection or cross-site scripting (XSS), because nothing in the prompt asked it to prioritize security.

- Inefficient Algorithms: Without constraints, the AI often opts for the simplest, most obvious solution rather than the most efficient one.

- 'Hallucinated' Syntax: Sometimes the AI confidently invents functions, methods, or even entire libraries that don’t exist, leaving you to debug phantom code.

What Are Guided Prompts? From Vague Requests to Precise Specifications

A Guided Prompt is less of an inquiry and more of a technical specification. It’s an engineered set of instructions that provides all the context, constraints, and examples necessary for an AI model to generate code that is not only functional but also robust, efficient, and aligned with your project's standards. Think of it as the difference between telling a junior developer “build a login page” and giving them a detailed ticket with user stories, acceptance criteria, and design mockups.

This approach builds on foundational work in AI research, such as Chain-of-Thought (CoT) prompting. Introduced in a 2022 paper from Google Research (Wei et al., 2022), CoT showed that large language models give more accurate answers on multi-step problems when prompted to generate intermediate reasoning steps before the final answer. Making the model show its work pushes it along a more logical path to the solution. When we apply this to code, we are essentially defining that logical path for the AI in advance.

DEFINITION BOX: Key Prompting Terms for Developers

- Zero-Shot Prompting: This is the most basic form of prompting. You ask the AI to do something without providing any prior examples. It’s the simplest but least reliable method.

* *Example:* `"Write a Python function to sort a list."`

- Few-Shot Prompting: This method involves providing the AI with a few examples of what you desire before making your actual request. It helps it understand the desired style, format, and complexity.

* *Example:* `"Here are two examples of how I format my functions... now write a new one that calculates a factorial."`

- Chain-of-Thought (CoT) for Code: This is where you instruct the AI’s reasoning process. You don’t just ask for the final code; you outline the steps, logic, or considerations it should follow to produce the code.

* *Example:* `"First, explain the logic for handling API rate limits. Then, write a Python function that implements this logic using an exponential backoff strategy."`
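For reference, here is a minimal Python sketch of the kind of function that CoT example asks for: retry a call with exponential backoff when it signals a rate limit. The `RateLimitError` exception, the `call` argument, and the delay parameters are illustrative assumptions, not part of any particular API client.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class RateLimitError(Exception):
    """Hypothetical error an API client raises on HTTP 429 (Too Many Requests)."""


def with_backoff(
    call: Callable[[], T],
    max_retries: int = 5,
    base_delay: float = 0.5,
    max_delay: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run `call`, retrying with exponential backoff on RateLimitError.

    Before retry n (counting from 0) it waits base_delay * 2**n seconds,
    capped at max_delay, plus up to 50% random jitter so that many clients
    do not retry in lockstep.
    """
    attempt = 0
    while True:
        try:
            return call()
        except RateLimitError:
            if attempt >= max_retries:
                raise  # Out of retries: let the caller handle the error.
            delay = min(base_delay * 2 ** attempt, max_delay)
            sleep(delay + random.uniform(0, delay / 2))
            attempt += 1
```

Making `sleep` injectable is a deliberate choice: passing a fake such as `waits.append` lets you test the retry logic without real delays.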

How to Design a Guided Prompt: A 5-Step Blueprint

To move from generic prompts to highly targeted ones, you need a consistent process. This five-step blueprint ensures the AI always has the information it needs, which significantly improves the quality of the code it generates.

- Define the Persona & Role: Tell the AI who it should be. An AI instructed to act as "a Senior Python Developer specializing in secure APIs" will produce markedly different code than one with no assigned role. Other examples include "a Cybersecurity Analyst," "a DevOps Engineer with expertise in AWS," or "a JavaScript developer focused on front-end performance."

- Provide Full Context: Give the AI the information it needs to make informed decisions. This includes the goal of the code, relevant existing snippets, data structures (like a JSON object schema), and how the new code will fit into the larger application.

- Set Clear Constraints & Requirements: Lay down explicit rules for the programming language version (e.g., Python 3.9+), required libraries (e.g., use 'requests', not 'urllib'), libraries to avoid, and performance requirements (e.g., "the algorithm's time complexity must be O(n log n) or better").

- Specify the Output Format: Clearly state the output format you require, whether it's raw code, JSON, Markdown with explanations, or code with specific comment styles (e.g., "add a docstring in Google's Python style").

- Provide Examples: Show the AI one or two samples of what you want (few-shot prompting), such as an existing function written in your project's style, and refine the prompt based on what comes back.
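To make the blueprint repeatable, you can assemble prompts from named parts instead of retyping them. The sketch below is one minimal way to do that in Python; the `GuidedPrompt` class, its field names, and the section labels are illustrative assumptions, not part of any tool.

```python
from dataclasses import dataclass, field


@dataclass
class GuidedPrompt:
    """Hypothetical container for the five parts of a guided prompt."""

    persona: str
    context: str
    constraints: list[str]
    output_format: str
    examples: list[str] = field(default_factory=list)

    def render(self) -> str:
        # Assemble the sections in blueprint order so every prompt
        # has the same predictable shape.
        parts = [
            f"Act as {self.persona}.",
            f"**Context:**\n{self.context}",
            "**Requirements:**\n" + "\n".join(f"- {c}" for c in self.constraints),
            f"**Output Format:**\n{self.output_format}",
        ]
        # Few-shot examples are optional and go last.
        if self.examples:
            parts.append("**Examples:**\n" + "\n\n".join(self.examples))
        return "\n\n".join(parts)


prompt = GuidedPrompt(
    persona="a Senior Python Developer specializing in secure APIs",
    context="The function will be called from a Flask view that receives a user ID.",
    constraints=["Python 3.9+", "Use the 'requests' library, not 'urllib'"],
    output_format="Raw code with a Google-style Python docstring.",
)
print(prompt.render())
```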

Anatomy of an Ideal Instruction: Superior vs. Inferior Examples

Let’s see how the 5-step blueprint performs in practice. The difference in output quality between a vague instruction and a guided one is striking.

| Category | Bad Instruction (Vague) | Good Instruction (Guided) | Analysis of the Improvement |
|---|---|---|---|
| Function Generation | "Create a script to fetch user data from an API." | "Act as a seasoned Python developer. Write a single function named `getUserProfile` that accepts a `userId` (integer) and an `apiKey` (string). Use the `requests` library for making GET requests. Include a Bearer token in the 'Authorization' header of your request. Implement comprehensive error handling, specifically addressing 404 (Not Found) and 500 (Server Error) responses by raising a custom `APIError`. The function should return a JSON object on success." | The good instruction specifies the persona, function signature, library, authentication method, and precise error handling. The result is predictable, robust code that needs far less rework before it is production-ready. |
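For illustration, here is one plausible version of the code the guided instruction asks for. It is a sketch, not a reference implementation: the base URL `https://api.example.com/users` is a placeholder (the instruction does not name an endpoint), the 10-second timeout is an added assumption, and the function keeps the camelCase name the prompt requests even though PEP 8 would suggest `get_user_profile`.

```python
import requests

# Placeholder endpoint (assumption): the instruction above does not specify one.
BASE_URL = "https://api.example.com/users"


class APIError(Exception):
    """Custom error raised for 404 and 500 responses, as the prompt requires."""

    def __init__(self, status_code: int, message: str) -> None:
        super().__init__(f"{status_code}: {message}")
        self.status_code = status_code


def getUserProfile(userId: int, apiKey: str) -> dict:
    """Fetch a user profile and return the decoded JSON body."""
    response = requests.get(
        f"{BASE_URL}/{userId}",
        headers={"Authorization": f"Bearer {apiKey}"},
        timeout=10,  # Assumed value; avoids hanging on an unresponsive server.
    )
    if response.status_code == 404:
        raise APIError(404, f"User {userId} not found")
    if response.status_code == 500:
        raise APIError(500, "Server error")
    # Any other 4xx/5xx status still raises requests.HTTPError.
    response.raise_for_status()
    return response.json()
```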

The prompt library below contains seven ready-made templates for common coding tasks. Use them as a foundation for tailored prompts that improve both productivity and maintainability.
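If you keep these templates in code rather than in a notes file, a few lines of Python can fill the placeholders and refuse to produce a half-filled prompt. This sketch uses the standard library's `string.Template` with `$name` placeholders instead of the `[BRACKETS]` used in the templates; the `TEMPLATES` dictionary and the `fill` helper are hypothetical.

```python
from string import Template

# A hypothetical in-code prompt library; add one entry per template.
TEMPLATES = {
    "bug_hunter": Template(
        "You are $persona with extensive experience in debugging. "
        "Your task is to identify and correct bugs in the provided code snippet.\n\n"
        "Problematic Code:\n\n$code\n\n"
        "Output Format: Structure your response in three parts: "
        '"Root Cause", "Corrected Code", and "Explanation".'
    ),
}


def fill(name: str, **values: str) -> str:
    """Fill a stored template, raising KeyError if a placeholder is missing.

    Template.substitute (unlike safe_substitute) raises KeyError for any
    placeholder without a value, so an incomplete prompt is never returned.
    """
    return TEMPLATES[name].substitute(**values)


print(fill("bug_hunter", persona="a Senior Java Developer", code="int x = 1 / 0;"))
```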

CODE_BLOCK 1: The 'New Feature' Prompt

- Use Case: Creating a new feature from scratch.

# The 'New Feature' Prompt

Act as a [PERSONA, e.g., Senior Go Developer].

I am writing a new feature to [ULTIMATE GOAL OF THE FEATURE, e.g., process user uploads]. Your task is to write a function named `[FUNCTION_NAME]` that fits within the following context.

**Context:**

[Provide surrounding code, data structures, or class definitions here. e.g., "This function will be a method of the `FileManager` class shown below..."]

**Requirements:**

- Language: [e.g., Go 1.18]

- Function Signature: `[e.g., func UploadFile(ctx context.Context, file io.Reader, bucket string) (string, error)]`

- Libraries: [e.g., Must use the official AWS S3 SDK for Go v2]

- Business Logic:

1. [e.g., Generate a unique UUID for the file name.]

2. [e.g., Upload the file to the specified S3 bucket.]

3. [e.g., On success, return the object URL; on failure, return a descriptive error.]

**Output Format:**

Provide only the raw code for the function, with comments explaining any complex logic.

CODE_BLOCK 2: The 'Code Refactor' Prompt

- Use Case: Improving existing code for readability, performance, or style adherence.

# The 'Code Refactor' Prompt

Act as a [PERSONA, e.g., Expert JavaScript Engineer] with a focus on performance and code clarity.

Refactor the following code snippet to achieve these specific goals:

1. **[GOAL 1, e.g., Improve readability by replacing the nested Promises with async/await syntax.]**

2. **[GOAL 2, e.g., Increase efficiency by running the API calls in parallel using `Promise.all`.]**

3. **[GOAL 3, e.g., Ensure the code adheres to the [Airbnb JavaScript Style Guide](https://github.com/airbnb/javascript).]**

**Original Code:**

```javascript
[Paste your messy code snippet here]
```

**Output Format:** Return a Markdown block containing the refactored code. Below the code, add a brief explanation of the key changes you made and why they improve the code.
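Goal 2 in this template, running independent API calls in parallel with `Promise.all`, has a close Python analogue in `asyncio.gather`. The sketch below uses a hypothetical `fetch_user` coroutine, with `asyncio.sleep` standing in for network I/O, to show the before/after shape such a refactor aims for.

```python
import asyncio


async def fetch_user(user_id: int) -> dict:
    """Hypothetical API call; asyncio.sleep stands in for network latency."""
    await asyncio.sleep(0.1)
    return {"id": user_id}


async def fetch_all_sequential(ids: list[int]) -> list[dict]:
    # Before: each request finishes before the next one starts,
    # so total time grows with len(ids).
    return [await fetch_user(i) for i in ids]


async def fetch_all_concurrent(ids: list[int]) -> list[dict]:
    # After: all requests run concurrently and are awaited as a group,
    # like Promise.all. Results come back in the same order as `ids`.
    return await asyncio.gather(*(fetch_user(i) for i in ids))


print(asyncio.run(fetch_all_concurrent([1, 2, 3])))
```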

CODE_BLOCK 3: The 'Bug Hunter' Prompt

- Use Case: Debugging a snippet of code and asking for an explanation of the fix.

The 'Bug Hunter' Prompt

You are [PERSONA, e.g., Senior Java Developer] with extensive experience in debugging. Your task is to identify and correct bugs in the provided code snippet.

Problematic Code:

[Paste your buggy code snippet here]

Task:

- Identify the root cause of the bug.

- Provide a corrected version of the code that fixes the bug.

- Explain why the bug occurred and how your fix resolves it.

Output Format: Structure your response in three parts: "Root Cause", "Corrected Code", and "Explanation".
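As a small illustration of the three-part answer this template requests, here is a classic Python bug and its fix. The `add_tag` functions are made up for the example.

```python
from typing import Optional


# Root Cause: the default list is created once, when the function is
# defined, so every call that omits `tags` shares (and mutates) it.
def add_tag_buggy(tag: str, tags: list[str] = []) -> list[str]:
    tags.append(tag)
    return tags


# Corrected Code: use None as a sentinel and build a fresh list per call.
def add_tag(tag: str, tags: Optional[list[str]] = None) -> list[str]:
    if tags is None:
        tags = []
    tags.append(tag)
    return tags
```

Explanation: calling `add_tag_buggy("a")` and then `add_tag_buggy("b")` returns `['a', 'b']` the second time, because both calls append to the same default list. The fixed `add_tag("b")` returns `['b']`.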

CODE_BLOCK 4: The 'Unit Test Writer' Prompt

- Use Case: Generating comprehensive unit tests for an existing function.

The 'Unit Test Writer' Prompt

Act as a [PERSONA, e.g., QA Engineer specializing in automated testing].

Your task is to write a comprehensive suite of unit tests for the following [LANGUAGE] function using the [TESTING FRAMEWORK, e.g., pytest for Python or Jest for JavaScript].

Function to Test:

[Paste the function you want to test here]

Requirements:

- Write at least three test cases, and ideally five or more.

- Cover the following scenarios:

  - The "happy path" with valid inputs.

  - Edge cases (e.g., empty lists, zero, null inputs).

  - Expected failure cases (e.g., invalid input should raise a `ValueError`).

- Use mocks where necessary to isolate the function from external dependencies like APIs or databases.

Output Format: Provide a single code block containing the complete test file.
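Here is a sketch of the kind of test file this template should produce. It uses plain `assert`-based test functions that pytest would collect, plus `unittest.mock.Mock` to stand in for an external dependency. The `average_order_value` function and its `client.get_orders` dependency are hypothetical; with pytest you would normally write the failure case as `with pytest.raises(ValueError):`.

```python
from unittest.mock import Mock


def average_order_value(client, user_id: int) -> float:
    """Hypothetical function under test: averages order totals from an API client."""
    if user_id <= 0:
        raise ValueError("user_id must be positive")
    orders = client.get_orders(user_id)
    if not orders:
        return 0.0
    return sum(o["total"] for o in orders) / len(orders)


def test_happy_path():
    client = Mock()  # Stands in for the real API client.
    client.get_orders.return_value = [{"total": 10.0}, {"total": 30.0}]
    assert average_order_value(client, 1) == 20.0
    client.get_orders.assert_called_once_with(1)


def test_empty_orders_returns_zero():
    client = Mock()
    client.get_orders.return_value = []
    assert average_order_value(client, 1) == 0.0


def test_invalid_user_id_raises():
    client = Mock()
    try:
        average_order_value(client, 0)
    except ValueError:
        pass
    else:
        raise AssertionError("expected ValueError")
    # Validation should happen before any external call.
    client.get_orders.assert_not_called()
```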

CODE_BLOCK 5: The 'Code Documentation' Prompt

- Use Case: Creating docstrings, comments, or even full Markdown documentation for a piece of code.

The 'Code Documentation' Prompt

Act as a [PERSONA, e.g., Technical Writer] who is an expert in documenting code for other developers.

Generate documentation for the following [LANGUAGE] code snippet. The documentation must be in the [DOCUMENTATION FORMAT, e.g., JSDoc, Google-style Python docstrings, or a Markdown file].

Code to Document:

[Paste your code snippet here]

Requirements:

- Document the purpose of the function/class.

- Explain each parameter (name, type, description).

- Describe the return value (type, description).

- Include a simple, clear code example showing how to use the function.

- Mention any exceptions or errors that the function might throw.

Output Format: Return the original code block, but with the requested documentation added.
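For example, with Google-style Python docstrings as the target format, the output might look like the sketch below. The `chunk` function is invented for illustration; note how the docstring covers each requirement in the template (purpose, parameters, return value, exceptions, and a usage example).

```python
def chunk(items: list, size: int) -> list[list]:
    """Split a list into consecutive chunks of a fixed size.

    The last chunk may be shorter than ``size`` if the items do not
    divide evenly.

    Args:
        items: The list to split. It is not modified.
        size: The maximum number of items per chunk. Must be positive.

    Returns:
        A new list of lists, each containing at most ``size`` items.

    Raises:
        ValueError: If ``size`` is less than 1.

    Example:
        >>> chunk([1, 2, 3, 4, 5], 2)
        [[1, 2], [3, 4], [5]]
    """
    if size < 1:
        raise ValueError("size must be at least 1")
    return [items[i:i + size] for i in range(0, len(items), size)]
```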

CODE_BLOCK 6: The 'Enforce Coding Standards' Prompt

- Use Case: Rewriting code to strictly follow a specific style guide (e.g., PEP 8 for Python, Google Java Style Guide).

The 'Enforce Coding Standards' Prompt

Act as an automated linter and code formatter.

Rewrite the following [LANGUAGE] code to strictly conform to the [STYLE GUIDE, e.g., PEP 8 for Python or the Google Java Style Guide].

Original Code:

[Paste your non-compliant code here]

Task: Correct all violations related to naming conventions, indentation, line spacing, import ordering, and any other rules specified in the style guide. Do not change the logic of the code.

Output Format: Provide only the clean, reformatted code.
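To make "do not change the logic" concrete, here is a small before/after pair for PEP 8. Both versions are valid Python and return the same result; only naming, spacing, and layout differ. The function itself is a made-up example.

```python
# Before: a CapWords function name, capitalized parameter and local names,
# missing whitespace around operators, and a compound statement on one line.
def CalcTotal( Items,TaxRate ):
    Total=0
    for I in Items: Total+=I['price']*I['qty']
    return Total*(1+TaxRate)


# After: snake_case names, spaces around operators, one statement per line.
# The arithmetic is unchanged, so both functions return identical values.
def calc_total(items, tax_rate):
    total = 0
    for item in items:
        total += item["price"] * item["qty"]
    return total * (1 + tax_rate)
```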

CODE_BLOCK 7: The 'Algorithm Explainer' Prompt

- Use Case: Asking the AI to explain a complex piece of code in simple terms, including its time and space complexity.

The 'Algorithm Explainer' Prompt

Act as a [PERSONA, e.g., Computer Science Professor] who is skilled at explaining complex topics simply.

Analyze the following [LANGUAGE] function and provide a clear explanation of what it does.

Code for Analysis:

[Paste your complex algorithm or code here]

Task:

- Provide a high-level summary of the algorithm's purpose.

- Give a step-by-step, line-by-line explanation of the code's logic in plain English.

- Calculate and explain the Time Complexity (Big O notation) of the algorithm.

- Calculate and explain the Space Complexity (Big O notation) of the algorithm.

Output Format: Use Markdown headings for each section: "Summary", "Step-by-Step Logic", "Time Complexity", and "Space Complexity".
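As a reference point for the kind of analysis this prompt should return, here is a standard iterative binary search with its time and space complexity worked out in the docstring.

```python
def binary_search(sorted_items: list[int], target: int) -> int:
    """Return the index of target in sorted_items, or -1 if absent.

    Time complexity: O(log n). Each iteration halves the search range
    [lo, hi], so at most about log2(n) + 1 iterations run.
    Space complexity: O(1). Only a few integer indices are stored,
    regardless of input size.
    """
    lo, hi = 0, len(sorted_items) - 1
    while lo <= hi:
        mid = (lo + hi) // 2
        if sorted_items[mid] == target:
            return mid
        if sorted_items[mid] < target:
            lo = mid + 1  # Target can only be in the upper half.
        else:
            hi = mid - 1  # Target can only be in the lower half.
    return -1
```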

Advanced Prompting Techniques for AI Coding Assistants

Once you've mastered the 5-step blueprint, you can start using more advanced techniques to tackle highly complex tasks. These methods require more interaction but can yield incredibly powerful results.

- Chain of Density: This technique builds up a result through a series of refining prompts. You start with a simple request and then, in subsequent prompts, ask the AI to add more detail, constraints, or features. For example, you might first generate a basic function, then ask it to "add logging", then "refactor to use a specific design pattern", and finally "add comprehensive error handling for network failures". This builds a complex piece of code step by step, letting you check quality at each stage.

- Self-Correction: This is like making the AI perform its own code review. After the AI generates a piece of code, prompt it with: "Critique the code you just wrote. Identify any potential bugs, style violations, or areas for improvement. Then, provide a new version that fixes these issues." This makes the model re-evaluate its own output and can catch subtle errors you might have missed.

- Integrating with Tools: The true potential of guided prompts is unlocked when they are integrated into your existing workflow. Modern Integrated Development Environments (IDEs) have extensions and AI coding tools that let you save and reuse complex prompts. Rather than typing them out, you can build a library of your best prompts (like the ones above) and apply them to your code with a single click. This is where the engineering approach truly pays off, turning a manual process into a repeatable, automated one.
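The first two techniques are easy to script. The sketch below assumes a hypothetical `complete(messages)` function that sends a chat history to whatever model you use and returns the reply text; it is not a real library call. `refine` sends a chain of follow-up requests while keeping the whole conversation as context, and `self_correct` appends the critique-and-rewrite prompt quoted in the Self-Correction item.

```python
from typing import Callable, Optional

Message = dict[str, str]
# Hypothetical model interface: receives the chat history, returns reply text.
Complete = Callable[[list[Message]], str]

SELF_CORRECT_PROMPT = (
    "Critique the code you just wrote. Identify any potential bugs, style "
    "violations, or areas for improvement. Then, provide a new version that "
    "fixes these issues."
)


def refine(
    complete: Complete,
    prompts: list[str],
    history: Optional[list[Message]] = None,
) -> list[Message]:
    """Send each prompt in turn, keeping the full conversation as context."""
    messages = list(history or [])  # Copy so the caller's list is untouched.
    for prompt in prompts:
        messages.append({"role": "user", "content": prompt})
        # Store the reply so the next prompt refines the latest version.
        messages.append({"role": "assistant", "content": complete(messages)})
    return messages


def self_correct(complete: Complete, history: list[Message]) -> list[Message]:
    """Ask the model to review and rewrite its most recent answer."""
    return refine(complete, [SELF_CORRECT_PROMPT], history)
```

A typical run would be `refine(complete, ["Write a basic function...", "Add logging", "Add comprehensive error handling for network failures"])`, followed by `self_correct(complete, history)`; the final code is in the last message's `content`.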

Common Errors to Avoid When Crafting Code Instructions

When you adopt a more structured method, be aware of these typical mistakes. By avoiding them, you'll save time and reduce frustration.

- Including Outdated Code: If you're providing context, ensure the code snippets included are up-to-date. Using an old version of a function can lead to incompatible code.

- Forgetting to Specify Versions: Always specify the language, framework, or library versions you're using. The difference between Python 3.7 and Python 3.10 is significant, and the AI needs to know which syntax and features are available.

- Asking for Too Much at Once: Don't try to generate an entire application in a single prompt. Break down large problems into smaller, more manageable functions or classes. A prompt that's too complex will often result in generic, low-quality code.

- Not Verifying the AI's Output: This is the golden rule. Always treat AI-generated code as a draft from a junior developer that requires senior review. Never copy-paste code directly into production without thoroughly testing and understanding it first.

For developers short on time, here’s the bottom line: moving from basic, conversational prompts to a structured, engineering-based approach is crucial for obtaining consistent, high-quality code from AI assistants. This guide offers a practical blueprint for achieving this goal. By treating your prompts as detailed specifications rather than simple requests, you can minimize frustrating bugs, enforce coding standards, and significantly speed up your development process.

Key Points:

- Change from Asking Questions to Directing: The main idea is shifting from simple inquiries to providing detailed, engineering-style instructions. You're not just asking for code; you are directing the AI with the precision of a project manager.

- The 5-Step Blueprint: For any significant code generation task, follow this reliable sequence: define the Persona → provide full Context → set clear Constraints → specify the output Format → refine with Examples.

- Templates are Speed Boosters: Use the provided prompt library as a starting point for all common coding tasks. They are battle-tested frameworks for generating features, refactoring code, writing unit tests, and more.

- Verification is Essential: Always treat AI-generated code as a draft from a capable but inexperienced junior developer. It requires a senior-level review to catch subtle bugs, security flaws, and logical errors before it ever touches production.

Conclusion: Begin Engineering Your Prompts Today

The distinction between a frustrating coding session with an AI and one that's highly productive often hinges on a subtle shift in perspective: stop asking, and start directing. By treating your AI coding assistant less as a magic eight-ball and more like a junior developer who needs precise instructions, you unlock consistent, high-quality results. The era of the 'lazy prompt' is over; the era of the prompt engineer has arrived.

This guide offers a blueprint—from the 5-step process for building a guided prompt to a library of copy-paste templates for your most common tasks. Think of these tools not as rigid rules but as a framework for creativity and precision. The real power lies in adapting them to your unique projects, team's coding standards, and personal workflow.

So, the next time you open your IDE, don't just ask for a function. Engineer it. Grab one of the templates from this guide, modify it for your specific challenge, and see the difference for yourself. We'd love to hear about your successes—share your experiences and favorite prompt templates in the comments below!

Q1: How can I make my AI-generated code more consistent across an entire project?

Code consistency is a classic challenge in software development. An effective remedy is to write a "project constitution" prompt before you start any new project: a reusable block that defines the context and guidelines the AI must follow, which significantly improves the consistency of the generated code.

Include details like:

- The Persona: "Act as a senior TypeScript developer who strictly follows the Google TypeScript Style Guide."

- The Stack: "We are using Next.js 14, Tailwind CSS, and Prisma for the ORM."

- Core Principles: "All functions must be pure where possible, and components should follow the container/presentational pattern."

Save this "constitution" and paste it at the beginning of every new conversation or prompt related to that project. This ensures the AI always has the same foundational instructions, leading to far more consistent style, architecture, and library usage.

Q2: Can guided prompts help me write code against a specific API?

Absolutely! This is a great scenario for guided prompts. Instead of just saying, "Write code for this API," you provide a detailed specification:

- The relevant part of the API documentation: Copy-paste the endpoint definition, expected request body, and example response.

- Your local data structures: Show the AI the shape of the data in your application.

- A precise goal: "Write a Python function `fetch_user_data(user_id)` that makes a GET request to the `/api/v1/users/{id}` endpoint. It must use the `requests` library, include an `Authorization: Bearer <token>` header, and handle potential 404 or 500 errors gracefully by returning `None`."

This transforms the AI from a guesser into a focused tool that maps the API's requirements directly onto your codebase.
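Showing the AI your local data structures pays off in the mapping code. For example, if your application represents users with the dataclass below, a well-specified prompt can ask for a function that converts the API's JSON payload into that shape and fails loudly on missing fields. The `User` fields and the payload keys (`id`, `full_name`, `email_address`) are hypothetical.

```python
from dataclasses import dataclass
from typing import Any


@dataclass(frozen=True)
class User:
    """Hypothetical local representation of a user."""

    id: int
    name: str
    email: str


# Hypothetical key names in the API's JSON response.
REQUIRED_KEYS = ("id", "full_name", "email_address")


def user_from_api(payload: dict[str, Any]) -> User:
    """Map an /api/v1/users/{id} response body onto the local User type.

    Raises:
        ValueError: If a required key is missing from the payload.
    """
    missing = [key for key in REQUIRED_KEYS if key not in payload]
    if missing:
        raise ValueError(f"API payload missing keys: {', '.join(missing)}")
    # The API's key names differ from ours; keep the mapping in one place.
    return User(
        id=int(payload["id"]),
        name=str(payload["full_name"]),
        email=str(payload["email_address"]),
    )
```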

Q3: Which AI code generation tools perform well with guided prompts?

Most modern large language models (like GPT-4, Claude 3, and Gemini) that power today's AI tools excel at interpreting long, detailed instructions. The effectiveness often hinges less on the specific model and more on the user interface.

Tools that support a wide context window (the amount of text you can input all at once) are naturally well-suited. Additionally, integrated development environment (IDE) extensions and dedicated platforms that allow you to save, manage, and reuse complex prompts as templates (such as PromptPilot's 'Guide Pilot' available at https://promptpilot.online/guide) are incredibly powerful because they turn the best practices we've discussed into a repeatable, one-click workflow.

Q4: How does this differ from just using GitHub Copilot's autocomplete?

It’s a difference in strategy versus tactics. Think of GitHub Copilot’s autocomplete as a brilliant pair programmer who finishes your sentences. It’s incredibly fast and effective for in-the-moment tasks—completing a line, suggesting the body of a simple function based on its name, or boilerplate code. It’s reactive and works on the immediate context of your open file.

Guided prompting is like a technical architect. You use it for more deliberate, high-level tasks. You don’t ask an architect to finish your line of code; you ask them to design a new module, refactor a complex system according to new standards, or generate a full suite of unit tests with specific mocking requirements. Copilot is for accelerating the writing of code, while guided prompts are for architecting and engineering the code.

Q5: Can AI-generated code introduce security vulnerabilities?

Yes, there is a risk that AI-generated code could introduce security flaws. Remember to view AI-generated code as a junior developer's contribution: useful, but it requires senior review.

Guided prompts can significantly reduce this risk by making your requests more specific and including security requirements directly. For example:

- "Write a SQL query using the `pg` library in Node.js, ensuring all user inputs are parameterized to prevent SQL injection."

- "Create a file upload handler in Python with Flask, validating file types and sizes while sanitizing filenames to avoid directory traversal attacks."
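To show what those requirements look like in code, here is a Python sketch that swaps Node.js `pg` for the standard-library `sqlite3` module (the principle is the same: pass user input as query parameters, never by string formatting), plus a simple filename check for uploads. The `users` table, the allowed extensions, and the 5 MiB size limit are assumptions for the example; in a real Flask app, Werkzeug's `secure_filename` is the usual tool for sanitizing names.

```python
import re
import sqlite3
from pathlib import PurePath
from typing import Optional

ALLOWED_EXTENSIONS = {".png", ".jpg", ".pdf"}  # Assumed upload policy.
MAX_UPLOAD_BYTES = 5 * 1024 * 1024  # Assumed 5 MiB limit.


def find_user(conn: sqlite3.Connection, email: str) -> Optional[tuple]:
    # The ? placeholder makes sqlite3 bind `email` as data, so input like
    # "x' OR '1'='1" cannot change the structure of the query.
    cur = conn.execute("SELECT id, email FROM users WHERE email = ?", (email,))
    return cur.fetchone()


def safe_upload_name(filename: str, size: int) -> str:
    """Validate an upload's size and type; return a sanitized file name."""
    if size > MAX_UPLOAD_BYTES:
        raise ValueError("file too large")
    # Keep only the final path component: "../../etc/passwd" -> "passwd".
    name = PurePath(filename.replace("\\", "/")).name
    # Replace characters outside a conservative set; strip leading dots.
    name = re.sub(r"[^A-Za-z0-9._-]", "_", name).lstrip(".")
    if not name or PurePath(name).suffix.lower() not in ALLOWED_EXTENSIONS:
        raise ValueError("disallowed file name or type")
    return name
```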

While this reduces common errors, it doesn’t eliminate the risk. The final responsibility for auditing, testing, and securing the code always falls on human developers.